Google is making its AI model, Gemini, central to its products, from Search to Android. The company is using Gemini to build new features, such as more advanced Search results with “AI Overviews,” and to streamline user workflows in products like Gmail. The post also emphasizes Google’s commitment to making its AI models helpful and accessible for everyone, including using AI to improve accessibility for people with disabilities.

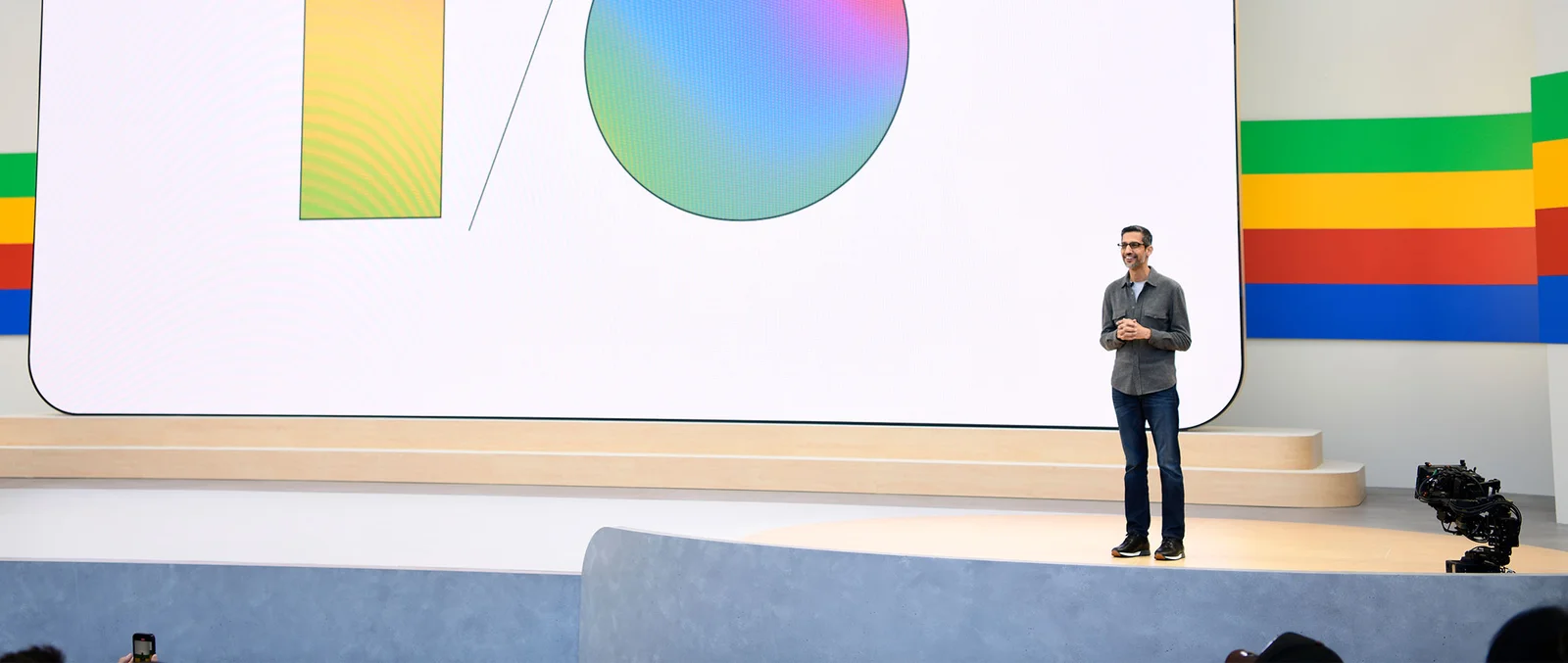

Google I/O 2024 was all about AI, specifically how Google is weaving AI into everything it does. Sundar Pichai, Google’s CEO, kicked things off with a simple message: Google is fully in its “Gemini era.” Gemini is Google’s most capable AI model to date, built to be “multimodal,” meaning it can understand and work with different types of input like text, images, video, and code.

Gemini is a big deal for Google. It’s powering new experiences in almost all of Google’s major products, including Search, Photos, Workspace, and Android. In Search, Gemini powers “AI Overviews,” which give summarized answers to user queries and draw on a range of perspectives from the web. AI Overviews is launching to U.S. users this week.

Pichai stressed that Google is building AI for everyone and is committed to making it helpful and accessible. He also highlighted that Google has been investing in AI for more than a decade.

Demis Hassabis, CEO of Google DeepMind, took the stage to discuss DeepMind’s role in the Gemini era. Hassabis, a renowned AI researcher, spoke about the pursuit of “artificial general intelligence,” a system with human-level cognitive abilities.

He introduced a new version of Gemini called “Gemini 1.5 Flash.” Flash is a lighter-weight version of Gemini designed to be faster and more cost-efficient, making it well suited to tasks where speed is critical. Flash is available to developers globally.

The demos were impressive, showcasing Gemini’s ability to understand complex information and generate creative content. For instance, we saw a demo of an AI agent that could understand a video of a bookshelf and identify the titles and authors of books, even when the authors were not visible on the spines. Another demo showed how Gemini could be used to create personalized music with different styles and instruments.

Google also emphasized its commitment to responsible AI development. James Manyika, Google’s SVP of Technology and Society, detailed how Google is improving model safety and protecting against misuse. The company uses techniques like “red-teaming,” in which it tests its models by deliberately trying to break them.

One of the exciting initiatives discussed was “LearnLM,” a new family of Gemini-based models specifically designed for education. Google envisions LearnLM powering AI tutors and assisting educators in the classroom.

Google I/O 2024 felt like a turning point. It’s clear that Google believes AI is the future, and it is betting big on Gemini to lead the way. The sheer scale of its investment and the ambition of its vision are impressive. The company is focused on integrating AI into every aspect of its product ecosystem, with the goal of making it helpful for everyone.